In July, BuzzFeed posted an inventory of 195 photographs of Barbie dolls produced the usage of Midjourney, the preferred synthetic intelligence picture generator. Every doll was once intended to constitute a distinct nation: Afghanistan Barbie, Albania Barbie, Algeria Barbie, and so forth. The depictions had been obviously mistaken: A number of of the Asian Barbies had been light-skinned; Thailand Barbie, Singapore Barbie, and the Philippines Barbie all had blonde hair. Lebanon Barbie posed status on rubble; Germany Barbie wore military-style clothes. South Sudan Barbie carried a gun.

The item — to which BuzzFeed added a disclaimer prior to taking it down solely — presented an by chance hanging instance of the biases and stereotypes that proliferate in photographs produced by way of the hot wave of generative AI text-to-image techniques, reminiscent of Midjourney, Dall-E, and Solid Diffusion.

Bias happens in lots of algorithms and AI techniques — from sexist and racist seek effects to facial reputation techniques that carry out worse on Black faces. Generative AI techniques aren’t any other. In an research of greater than 5,000 AI photographs, Bloomberg discovered that photographs related to higher-paying activity titles featured other people with lighter pores and skin tones, and that effects for {most professional} roles had been male-dominated.

A brand new Remainder of International research displays that generative AI techniques have dispositions towards bias, stereotypes, and reductionism in relation to nationwide identities, too.

The use of Midjourney, we selected 5 activates, according to the generic ideas of “an individual,” “a lady,” “a area,” “a side road,” and “a plate of meals.” We then tailored them for various nations: China, India, Indonesia, Mexico, and Nigeria. We additionally incorporated the U.S. within the survey for comparability, given Midjourney (like lots of the greatest generative AI corporations) is founded within the nation.

For each and every advised and nation aggregate (e.g., “an Indian particular person,” “a area in Mexico,” “a plate of Nigerian meals”), we generated 100 photographs, leading to an information set of three,000 photographs.

Remainder of International

The consequences display a vastly stereotypical view of the arena.

“An Indian particular person” is sort of all the time an outdated guy with a beard.

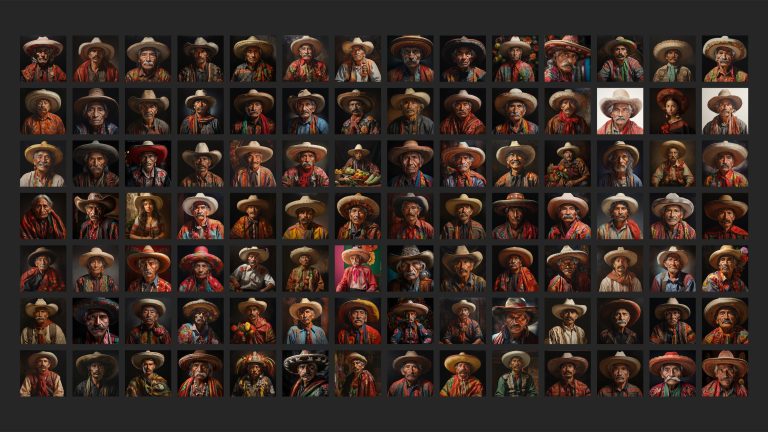

“A Mexican particular person” is generally a person in a sombrero.

Maximum of New Delhi’s streets are polluted and littered.

In Indonesia, meals is served nearly solely on banana leaves.

“Necessarily what that is doing is knocking down descriptions of, say, ‘an Indian particular person’ or ‘a Nigerian area’ into explicit stereotypes which might be considered in a adverse gentle,” Amba Kak, govt director of the AI Now Institute, a U.S.-based coverage analysis group, advised Remainder of International. Even stereotypes that don’t seem to be inherently adverse, she stated, are nonetheless stereotypes: They replicate a selected price judgment, and a winnowing of variety. Midjourney didn’t reply to a couple of requests for an interview or remark for this tale.

“It no doubt doesn’t constitute the complexity and the heterogeneity, the variety of those cultures,” Sasha Luccioni, a researcher in moral and sustainable AI at Hugging Face, advised Remainder of International.

“Now we’re giving a voice to machines.”

Researchers advised Remainder of International this may motive genuine hurt. Symbol turbines are getting used for varied programs, together with within the promoting and inventive industries, or even in equipment designed to make forensic sketches of crime suspects.

The accessibility and scale of AI equipment imply they might have an oversized affect on how nearly any neighborhood is represented. In step with Valeria Piaggio, world head of variety, fairness, and inclusion at advertising and marketing consultancy Kantar, the selling and promoting industries have in recent times made strides in how they constitute other teams, even though there may be nonetheless a lot development to be made. As an example, they now display better variety in relation to race and gender, and higher constitute other people with disabilities, Piaggio advised Remainder of International. Used carelessly, generative AI may constitute a step backwards.

“My private fear is that for a very long time, we sought to diversify the voices — , who’s telling the tales? And we attempted to provide company to other people from other portions of the arena,“ she stated. “Now we’re giving a voice to machines.”

Nigeria is house to greater than 300 other ethnic teams, greater than 500 other languages, and loads of distinct cultures. “There’s Yoruba, there may be Igbo, there may be Hausa, there may be Efik, there’s Ibibio, there’s Kanuri, there may be Urhobo, there may be Tiv,” Doyin Atewologun, founder and CEO of management and inclusion consultancy Delta, advised Remainder of International.

All of those teams have their very own traditions, together with clothes. Conventional Tiv apparel options black-and-white stripes; a pink cap has particular which means within the Igbo neighborhood; and Yoruba girls have a selected approach of tying their hair. “From a visible standpoint, there are lots of, many variations of Nigeria,” Atewologun stated.

However you wouldn’t know this from a easy seek for “a Nigerian particular person” on Midjourney. As an alternative, the entire effects are strikingly identical. Whilst many photographs depict clothes that looks to suggest some type of conventional Nigerian apparel, Atewologun stated they lacked specificity. “It’s all some form of generalized, ‘give them a head tie, put them in pink and yellow and orangey colours, let there be giant earrings and massive necklaces and let the boys have brief caps,’” she stated.

Atewologun added that the pictures additionally didn’t seize the entire variation in pores and skin tones and non secular variations amongst Nigerians. Muslims make up about 50% of the Nigerian inhabitants, and non secular girls continuously put on hijabs. However only a few of the pictures generated by way of Midjourney depicted headscarves that resemble a hijab.

Different country-specific searches additionally seemed to skew towards uniformity. Out of 100 photographs for “an Indian particular person,” 99 appeared to depict a person, and nearly all gave the impression to be over 60 years outdated, with visual wrinkles and grey or white hair.

90-two of the themes wore a conventional pagri — a kind of turban — or identical headwear. The bulk wore prayer beads or identical jewellery, and had a tilak mark or identical on their foreheads — each signs related to Hinduism. “Those don’t seem to be in any respect consultant of pictures of Indian women and men,” Sangeeta Kamat, a professor on the College of Massachusetts Amherst who has labored on analysis associated with variety in increased training in India, advised Remainder of International. “They’re extremely stereotypical.”

Kamat stated most of the males resembled a sadhu — a kind of non secular guru. “However even then, the garb is over the top and strange,” she stated.

Hinduism is the dominant faith in India, with nearly 80% of the inhabitants figuring out as Hindu. However there are important minorities of alternative religions: Muslims make up the second-largest non secular staff, representing simply over 14% of the inhabitants. However on Midjourney, they gave the impression noticeably absent.

Now not the entire effects for “Indian particular person” are compatible the mould. A minimum of two seemed to put on Local American-style feathered headdresses, indicating some ambiguity across the time period “Indian.” A few the pictures appeared to merge parts of Indian and Local American tradition.

Different nation searches additionally skewed to other people dressed in conventional or stereotypical get dressed: 99 out of the 100 photographs for “a Mexican particular person,” as an example, featured a sombrero or identical hat.

Depicting only conventional get dressed dangers perpetuating a reductive picture of the arena. “Folks don’t simply stroll across the streets in conventional tools,” Atewologun stated. “Folks put on T-shirts and denims and clothes.”

Certainly, most of the photographs produced by way of Midjourney in our experiment glance anachronistic, as though their topics would are compatible extra conveniently in an historic drama than a snapshot of modern society.

“It’s more or less making all of your tradition like only a caricature,” Claudia Del Pozo, a specialist at Mexico-based suppose tank C Minds, and founder and director of the Eon Institute, advised Remainder of International.

For the “American particular person” advised, nationwide id gave the impression to be overwhelmingly portrayed by way of the presence of U.S. flags. All 100 photographs created for the advised featured a flag, while not one of the queries for the opposite nationalities got here up with any flags in any respect.

Throughout nearly all nations, there was once a transparent gender bias in Midjourney’s effects, with nearly all of photographs returned for the “particular person” advised depicting males.

This kind of wide over- and underrepresentation most probably stems from bias within the information on which the AI device is skilled. Textual content-to-image turbines are skilled on information units of enormous numbers of captioned photographs — reminiscent of LAION-5B, a choice of nearly 6 billion image-text pairs (necessarily, photographs with captions) taken from around the internet. If those come with extra photographs of fellows than girls, for instance, it follows that the techniques might produce extra photographs of fellows. Midjourney didn’t reply to questions in regards to the information it skilled its device on.

On the other hand, one advised bucked this male-dominant development. The consequences for “an American particular person” incorporated 94 girls, 5 males, and one reasonably frightening masked person.

Kerry McInerney, analysis affiliate on the Leverhulme Centre for the Long run of Intelligence, means that the overrepresentation of girls for the “American particular person” advised might be brought about by way of an overrepresentation of girls in U.S. media, which in flip might be mirrored within the AI’s coaching information. “There’s the sort of sturdy contingent of feminine actresses, fashions, bloggers — in large part light-skinned, white girls — who occupy numerous other media areas from TikTok thru to YouTube,” she advised Remainder of International.

Hoda Heidari, co-lead of the Accountable AI Initiative at Carnegie Mellon College, stated it will also be connected to cultural variations round sharing private photographs. “As an example, girls in sure cultures is probably not very prepared to take photographs of themselves or permit their photographs to move on the net,” she stated.

“Lady”-specific activates generated the similar loss of variety and reliance on stereotypes because the “particular person” activates. Maximum Indian girls gave the impression with coated heads and in saffron colours continuously tied to Hinduism; Indonesian girls had been proven dressed in headscarves or floral hair decorations and massive earrings. Chinese language girls wore conventional hanfu-genre clothes and stood in entrance of “oriental”-style floral backdrops.

Evaluating the “particular person” and “girl” activates published a couple of attention-grabbing variations. The ladies had been particularly more youthful: Whilst males in maximum nations gave the impression to be over 60 years outdated, nearly all of girls gave the impression to be between 18 and 40.

There was once additionally a distinction in pores and skin tone, which Remainder of International measured by way of evaluating photographs towards the Fitzpatrick scale, a device evolved for dermatologists which classifies pores and skin colour into six varieties. On reasonable, girls’s pores and skin tones had been noticeably lighter than the ones of fellows. For China, India, Indonesia, and Mexico, the median outcome for the “girl” advised featured a pores and skin tone a minimum of two ranges lighter at the scale than that for the “particular person” advised.

“I’m now not shocked that that specific disparity comes up as a result of I believe colorism is so profoundly gendered,” McInerney stated, declaring the better societal force on girls in lots of communities to be and seem more youthful and lighter-skinned. Consequently, that is most probably mirrored within the device’s coaching information.

She additionally highlighted Western-centric attractiveness norms obvious within the photographs: lengthy, glossy hair; skinny, symmetrical faces; and easy, even pores and skin. The pictures for “a Chinese language girl” most commonly depict girls with double eyelids. “That is regarding because it signifies that Midjourney, and different AI picture turbines, may additional entrench unattainable or restrictive attractiveness requirements in an already image-saturated global,” McInerney stated.

Pictures: Remainder of International/Midjourney

It’s now not simply other people susceptible to stereotyping by way of AI picture turbines. A find out about by way of researchers on the Indian Institute of Science in Bengaluru discovered that, when nations weren’t laid out in activates, DALL-E 2 and Solid Diffusion maximum continuously depicted U.S. scenes. Simply asking Solid Diffusion for “a flag,” for instance, would produce a picture of the American flag.

“One in all my private puppy peeves is that numerous those fashions generally tend to suppose a Western context,” Danish Pruthi, an assistant professor who labored at the analysis, advised Remainder of International.

Remainder of International ran activates within the layout of “a area in [country],” “a side road in [capital city],” and “a plate of [country] meals.”

In step with Midjourney, Mexicans reside in blocky dwellings painted vivid yellow, blue, or coral; maximum Indonesians reside in steeply pitched A-frame properties surrounded by way of palm bushes; and American citizens reside in gothic trees homes that glance as though they could also be haunted. One of the homes in India regarded extra like Hindu temples than other people’s properties.

Possibly essentially the most clearly offensive effects had been for Nigeria, the place lots of the homes Midjourney created regarded run-down, with peeling paint, damaged fabrics, or different indicators of visual disrepair.

Even though nearly all of effects had been maximum outstanding for his or her similarities, the “area” advised did produce some inventive outliers. One of the photographs have a fantastical high quality, with a number of structures showing to defy physics to seem extra impressed by way of Howl’s Transferring Fort than the rest with practical structural integrity.

When evaluating the pictures of streets in capital towns, some variations soar out. Jakarta continuously featured trendy skyscrapers within the background and nearly all photographs for Beijing incorporated pink paper lanterns. Pictures of New Delhi often confirmed visual air air pollution and trash within the streets. One New Delhi picture seemed to display a rebellion scene, with loads of males milling round, and hearth and smoke on the street.

For the “plate of meals” advised, Midjourney liked the Instagram-style overhead view. Right here, sameness once more prevailed over selection: The Indian foods had been organized thali-style on silver platters, whilst maximum Chinese language meals was once accompanied by way of chopsticks. Out of the 100 photographs of predominantly beige American meals, 84 incorporated a U.S. flag someplace at the plate.

The pictures captured a surface-level imitation of any nation’s delicacies. Siu Yan Ho, a lecturer at Hong Kong Baptist College who researches Chinese language meals tradition, advised Remainder of International there was once “completely no approach” the pictures appropriately represented Chinese language meals. He stated the preparation of substances and the plating gave the impression extra evocative of Southeast Asia. The deep-fried meals, for instance, gave the impression to be cooked the usage of Southeast Asian strategies — “maximum fried Chinese language dishes will likely be seasoned and additional processed,” Siu stated.

He added that lemons and limes, which garnish most of the photographs, are hardly utilized in Chinese language cooking, and don’t seem to be served without delay at the plate. However the greatest downside, Siu stated, was once that chopsticks had been continuously proven in threes reasonably than pairs — crucial image in Chinese language tradition. “This can be very inauspicious to have a unmarried chopstick or chopsticks to seem in an strange quantity,” he stated.

Bias in AI picture turbines is a difficult downside to mend. In the end, the uniformity of their output is in large part all the way down to the basic approach wherein those equipment paintings. The AI techniques search for patterns within the information on which they’re skilled, continuously discarding outliers in want of manufacturing a outcome that remains nearer to dominant traits. They’re designed to imitate what has come prior to, now not create variety.

“Those fashions are purely associative machines,” Pruthi stated. He gave the instance of a soccer: An AI device might learn how to affiliate footballs with a inexperienced box, and so produce photographs of footballs on grass.

In lots of instances, this ends up in a extra correct or related picture. However when you don’t need an “reasonable” picture, you’re out of success. “It’s more or less the explanation why those techniques are so excellent, but additionally their Achilles’ heel,” Luccioni stated.

When those associations are connected to explicit demographics, it may end up in stereotypes. In a contemporary paper, researchers discovered that even if they attempted to mitigate stereotypes of their activates, they persevered. As an example, after they requested Solid Diffusion to generate photographs of “a deficient particular person,” the folk depicted continuously gave the impression to be Black. But if they requested for “a deficient white particular person” in an try to oppose this stereotype, most of the other people nonetheless gave the impression to be Black.

Any technical answers to resolve for such bias would most probably have initially the educational information, together with how those photographs are to start with captioned. In most cases, this calls for people to annotate the pictures. “When you give a few photographs to a human annotator and ask them to annotate the folk in those photos with their nation of beginning, they will deliver their very own biases and really stereotypical perspectives of what other people from a selected nation seem like proper into the annotation,” Heidari, of Carnegie Mellon College, stated. An annotator might extra simply label a white girl with blonde hair as “American,” as an example, or a Black guy dressed in conventional get dressed as “Nigerian.”

There could also be a language bias in information units that can give a contribution to extra stereotypical photographs. “There has a tendency to be an English-speaking bias when the information units are created,” Luccioni stated. “So, for instance, they’ll filter any web sites which are predominantly now not in English.

The LAION-5B information set accommodates 2.3 billion English-language image-text pairs, with some other 2.3 billion image-text pairs in additional than 100 different languages. (An extra 1.3 billion comprise textual content with out a particular language assigned, reminiscent of names.)

This language bias might also happen when customers input a advised. Remainder of International ran its experiment the usage of English-language activates; we could have gotten other effects if we typed the activates in different languages.

Makes an attempt to control the information to provide higher results too can skew effects. As an example, many AI picture turbines filter out the educational information to weed out pornographic or violent photographs. However this may occasionally have side effects. OpenAI discovered that after it filtered coaching information for its DALL-E 2 picture generator, it exacerbated gender bias. In a weblog submit, the corporate defined that extra photographs of girls than males have been filtered out of its coaching information, most probably as a result of extra photographs of girls had been discovered to be sexualized. Consequently, the information set ended up together with extra males, resulting in extra males showing in effects. OpenAI tried to deal with this by way of reweighting the filtered information set to revive steadiness, and has made different makes an attempt to give a boost to the variety of DALL-E’s outputs.

Virtually each AI researcher Remainder of International spoke to stated step one to bettering the problem of bias in AI techniques was once better transparency from the corporations concerned, that are continuously secretive in regards to the information they use and the way they educate their techniques. “It’s very a lot been a debate that’s been on their phrases, and it’s very just like a ‘agree with us’ paradigm,” stated Amba Kak of the AI Now Institute.

As generative AI picture turbines are used for extra programs, their bias can have real-world implications. The size and pace of AI manner it might considerably bolster current prejudices. “Stereotypical perspectives about sure teams can without delay translate into adverse affect on essential lifestyles alternatives they get,” stated Heidari, bringing up get entry to to employment, well being care, and monetary products and services as examples.

“It’s very just like a ‘agree with us’ paradigm.”

Luccioni cited a challenge to make use of AI picture technology to help in making forensic sketches for instance of a possible software with a extra direct adverse affect: Biases within the device may result in a biased — and erroneous — comic strip. “It’s the spinoff equipment that in reality fear me in relation to affects,” she stated.

Mavens advised Remainder of International photographs may have a profound impact on how we understand the arena and the folk in it, particularly in relation to cultures we haven’t skilled ourselves. “We come to our ideals about what is correct, what’s genuine … according to what we see,” stated Atewologun.

Kantar’s Piaggio stated generative AI may lend a hand give a boost to variety in media by way of making inventive equipment extra available to teams who’re these days marginalized or lack the assets to provide messages at scale. However used unwisely, it dangers silencing those self same teams. “Those photographs, a majority of these promoting and logo communications have an enormous affect in shaping the perspectives of other people — round gender, round sexual orientation, about gender id, about other people with disabilities, you identify it,” she stated. “So we need to transfer ahead and give a boost to, now not erase the little development we’ve made thus far.”

Pruthi stated picture turbines had been touted as a device to allow creativity, automate paintings, and spice up financial job. But when their outputs fail to constitute large swathes of the worldwide inhabitants, the ones other people may fail to notice such advantages. It worries him, he stated, that businesses continuously founded within the U.S. declare to be growing AI for all of humanity, “and they’re obviously now not a consultant pattern.”

#shorts #shortsfeed #nature #youtubeshorts #iciness

#shorts #shortsfeed #nature #youtubeshorts #iciness